Supported cluster types

BigAnimal supports three cluster types:

- Single node

- Standard high availability

- Extreme high availability (beta)

You choose which type of cluster you want on the Create Cluster page in the BigAnimal portal.

Postgres distribution and version support varies by cluster type.

| Postgres distribution | Versions | Cluster type |
|---|---|---|
| PostgreSQL | 11–15 | Single node, high availability |
| Oracle Compatible | 11–15 | Single node, high availability |
| Oracle Compatible | 12–15 | Extreme high availability |
| PostgreSQL Compatible | 12–15 | Extreme high availability |

Single node

For nonproduction use cases where high availability is not a primary concern, a single-node cluster provides one primary with no standby replicas for failover or read-only workloads.

In case of unrecoverable failure of the primary, a restore from a backup is required.

High availability

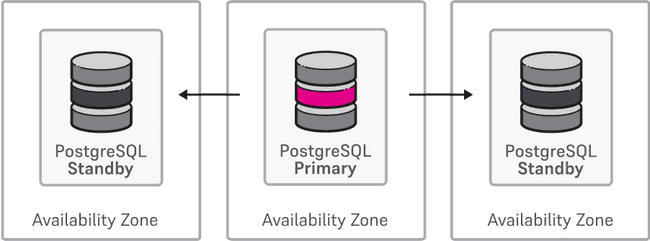

The high availability option minimizes downtime when failures occur. High-availability clusters, which consist of one primary and one or two standby replicas, are configured automatically. Standby replicas stay up to date through physical streaming replication.

If read-only workloads are enabled, standby replicas serve the read-only workloads. In a two-node cluster, the single standby replica serves read-only workloads. In a three-node cluster, both standby replicas serve read-only workloads, and each new connection is routed to one of the two standby replicas at random.
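The per-connection random routing described above can be pictured with a minimal sketch. The function name `pick_read_only_target` and the standby names are hypothetical and used only for illustration; this is not BigAnimal's routing implementation.

```python
import random


def pick_read_only_target(standbys, rng=random):
    """Choose a standby replica at random for one new read-only connection."""
    if not standbys:
        raise ValueError("no standby replicas available for read-only workloads")
    return rng.choice(standbys)


# Two-node cluster: the single standby serves every read-only connection.
two_node = ["standby-1"]
# Three-node cluster: each new connection lands on either standby.
three_node = ["standby-1", "standby-2"]

rng = random.Random(0)  # seeded only to make the illustration repeatable
targets = [pick_read_only_target(three_node, rng) for _ in range(10)]
print(targets)
```

Because the choice is made once per connection, a long-lived connection stays on the standby it was first routed to.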

In cloud regions with availability zones, clusters are provisioned across zones to provide fault tolerance in the face of a datacenter failure.

Incoming client connections are always routed to the current primary. If the primary becomes temporarily or permanently unavailable, a standby replica is automatically promoted to primary, and new connections are routed to the new primary. When the old primary recovers, it rejoins the cluster as a standby replica.
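The failover sequence can be modeled as a small state machine. This is a simplified sketch, not BigAnimal's implementation: the `Cluster` class, its method names, and the node names are hypothetical, and promoting the first standby in the list is an assumption made only to keep the example deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    primary: str
    standbys: list = field(default_factory=list)
    failed: list = field(default_factory=list)

    def route_connection(self):
        """New client connections always go to the current primary."""
        return self.primary

    def fail_primary(self):
        """Promote a standby when the primary becomes unavailable."""
        if not self.standbys:
            raise RuntimeError("no standby replica available to promote")
        self.failed.append(self.primary)
        # Assumption: promote the first standby in the list.
        self.primary = self.standbys.pop(0)

    def recover(self, node):
        """A recovered node rejoins the cluster as a standby replica."""
        self.failed.remove(node)
        self.standbys.append(node)


cluster = Cluster(primary="node-1", standbys=["node-2", "node-3"])
cluster.fail_primary()              # node-1 fails, node-2 is promoted
print(cluster.route_connection())   # new connections reach node-2
cluster.recover("node-1")           # node-1 returns as a standby
print(cluster.standbys)
```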

Standby replicas

By default, replication is synchronous to one standby replica and asynchronous to the other. That is, one standby replica must confirm that a transaction record was written to disk before the client receives acknowledgment of a successful commit.

In a cluster with one primary and one replica (a two-node high availability cluster), writes are at greater risk of becoming unavailable than in a three-node cluster, because only one standby replica is available to confirm synchronous commits. To ensure write availability, BigAnimal automatically disables synchronous replication during maintenance operations of a two-node cluster. You can also change a two-node cluster from the default synchronous replication to asynchronous replication on a per-session or per-transaction basis.
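The difference between two-node and three-node clusters can be illustrated with a simplified model of the "one standby must confirm" rule. The function `commit_acknowledged` is hypothetical, and the model ignores timing and the maintenance-time behavior described above. It shows only why a single unavailable standby matters more in a two-node cluster.

```python
def commit_acknowledged(confirming_standbys, listed_standbys, required=1):
    """Return True if enough listed standbys confirmed the write.

    Simplified model: the client sees a successful commit only after
    `required` of the listed standbys confirm the transaction record.
    """
    confirmed = set(confirming_standbys) & set(listed_standbys)
    return len(confirmed) >= required


# Three-node cluster: replica-1 is down, replica-2 still confirms.
print(commit_acknowledged(["replica-2"], ["replica-1", "replica-2"]))

# Two-node cluster: the only standby is down, so nothing confirms
# and a synchronous commit would have to wait.
print(commit_acknowledged([], ["replica-1"]))
```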

In PostgreSQL terms, synchronous_commit is set to on and synchronous_standby_names is set to ANY 1 (replica-1, replica-2). You can modify this behavior on a per-transaction, per-session, per-user, or per-database basis with appropriate SET or ALTER commands.
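As a rough guide to the scopes mentioned above, the following sketch builds the corresponding PostgreSQL statements as strings. The helper `synchronous_commit_sql` and the role and database names are hypothetical. The statements themselves (`SET`, `SET LOCAL`, `ALTER ROLE ... SET`, `ALTER DATABASE ... SET`) and the listed `synchronous_commit` values are standard PostgreSQL.

```python
# Values PostgreSQL accepts for synchronous_commit.
# "local" waits only for the local flush, not for a standby.
VALID_VALUES = {"on", "off", "local", "remote_write", "remote_apply"}


def synchronous_commit_sql(scope, value, name=None):
    """Build the statement that sets synchronous_commit at a given scope."""
    if value not in VALID_VALUES:
        raise ValueError(f"unsupported synchronous_commit value: {value}")
    if scope == "transaction":
        # SET LOCAL applies only inside the current transaction block.
        return f"SET LOCAL synchronous_commit = {value};"
    if scope == "session":
        return f"SET synchronous_commit = {value};"
    if scope in ("user", "database"):
        if not name:
            raise ValueError(f"a name is required for scope {scope!r}")
        # Quote the identifier, doubling any embedded double quotes.
        quoted = '"' + name.replace('"', '""') + '"'
        keyword = "ROLE" if scope == "user" else "DATABASE"
        return f"ALTER {keyword} {quoted} SET synchronous_commit = {value};"
    raise ValueError(f"unknown scope: {scope}")


print(synchronous_commit_sql("transaction", "local"))
print(synchronous_commit_sql("session", "local"))
print(synchronous_commit_sql("user", "local", "reporting_user"))
print(synchronous_commit_sql("database", "local", "analytics"))
```

Settings applied with `ALTER ROLE` or `ALTER DATABASE` take effect for new sessions, not for sessions that are already open.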

Because BigAnimal replicates synchronously to only one node, some standby replicas in three-node clusters might experience replication lag. Also, if you override the BigAnimal synchronous replication configuration, the standby replicas can become inconsistent.

Extreme high availability (beta)

Extreme high availability clusters are powered by EDB Postgres Distributed, a logical replication platform that delivers more advanced cluster management than a system based on physical replication.

Extreme high availability clusters can be deployed with either PostgreSQL or Oracle compatibility.

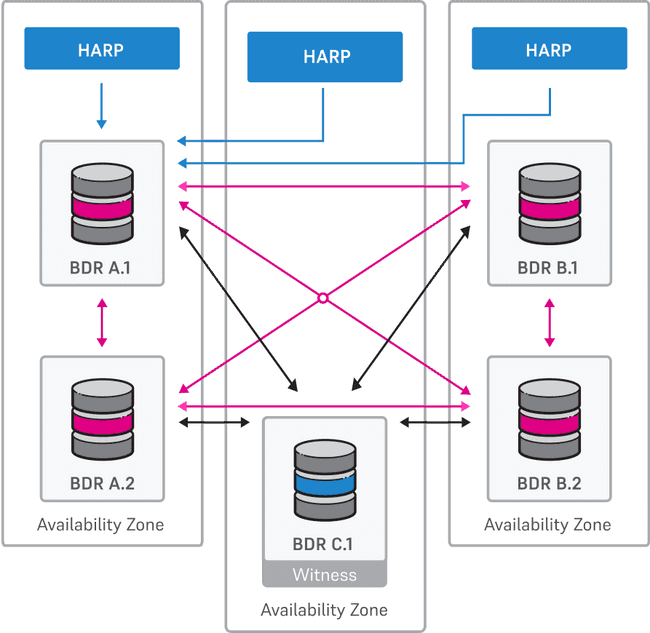

Extreme high availability clusters deploy four data-hosting "BDR" nodes across two availability zones (A and B in the diagram). One of these nodes is the leader at any given time (A.1 in the diagram). The rest are typically referred to as "shadow" nodes. The HARP router can promote any shadow node to leader at any time. The third availability zone (C) contains one node called the "witness". This node doesn't host data. It exists only for management purposes, to support operations that require consensus in case of an availability zone failure.
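The role of the witness can be illustrated with a small majority calculation. This sketch assumes that consensus requires a strict majority of all nodes, which is a simplification. Apart from A.1, the node names and the `has_quorum` helper are hypothetical.

```python
# Node name -> availability zone. C.1 is the witness and hosts no data.
NODES = {
    "A.1": "A", "A.2": "A",
    "B.1": "B", "B.2": "B",
    "C.1": "C",
}


def has_quorum(nodes, failed_zones):
    """Return True if the surviving nodes form a strict majority."""
    alive = [n for n, zone in nodes.items() if zone not in failed_zones]
    return len(alive) > len(nodes) // 2


# Zone A fails: two data nodes in B plus the witness make 3 of 5.
print(has_quorum(NODES, {"A"}))

# The same failure without a witness leaves 2 of 4, which is not a majority.
without_witness = {n: z for n, z in NODES.items() if z != "C"}
print(has_quorum(without_witness, {"A"}))
```

Under this assumption, the witness is what lets the cluster keep a majority when either data-hosting zone is lost.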

The EDB Postgres Distributed router (HARP) routes all application traffic to the leader node, which acts as the principal write target to reduce the potential for data conflicts. HARP leverages a distributed consensus model to determine availability of the BDR nodes in the cluster. On failure or unavailability of the leader, HARP elects a new leader and redirects application traffic. Together with the core capabilities of BDR, this mechanism of routing application traffic to the leader node enables fast failover and switchover without risk of data loss.